Google keeps updating its search tooling to be friendlier to both users and SEOs. Google Search Console, the rebranded version of Webmaster Tools, is Google's main tool for site owners. It includes features such as Search Analytics and Links to Your Site that are aimed at helping SEOs.

Google’s help docs and support resources are useful when fixing the crawl errors reported in Search Console.

How to Fix Crawl Errors?

Concentrate on the following factors

Infrastructure

Good habits, preventative maintenance and periodic spot checks will help you keep crawl errors under control. Small errors that are ignored tend to grow into bigger problems later.

Layout of crawl errors within Search Console

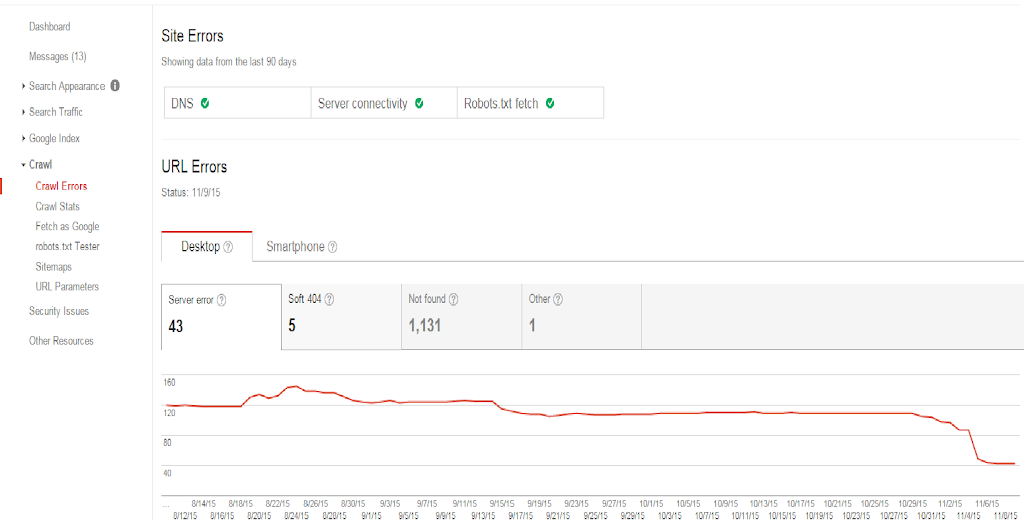

Search Console splits crawl errors into two groups: site errors and URL errors. Site errors differ from URL (page-level) errors, and they can be more damaging because they affect the usability of the whole site.

You can access crawl errors quickly from the dashboard. The dashboard gives you a preview of the site through three important management tools: Crawl Errors, Search Analytics and Sitemaps.

More about site errors

Site errors affect the entire site, and ignoring them can be catastrophic. The Search Console dashboard shows site errors for the last 90 days. Checking them every day is a good habit, because a site-wide problem that goes unnoticed can do serious damage. Setting up alerts makes this easier.

DNS errors

Domain Name System (DNS) errors are the most important issues to fix first. If Googlebot hits a DNS error, it cannot connect to your site, either because of a DNS lookup failure or because of a DNS timeout. Fetch and Render, ISUP.me and Web-Sniffer.net are some of the tools that help you diagnose DNS errors.
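For a quick spot check outside those tools, a DNS lookup can be scripted. The following is a minimal sketch in Python, not Googlebot's actual behaviour: the function name `check_dns` and the injectable `resolver` parameter are assumptions made for illustration, and the check only tells you whether the name resolves from the machine running it.

```python
import socket


def check_dns(hostname, resolver=socket.getaddrinfo):
    """Try to resolve hostname and return (ok, detail).

    `resolver` is a hypothetical hook (defaults to socket.getaddrinfo)
    so the check can be exercised without network access.
    """
    try:
        results = resolver(hostname, 443)
    except socket.gaierror as exc:
        # Lookup failure: the name did not resolve.
        return False, f"DNS lookup failed: {exc}"
    except OSError as exc:
        # Timeouts and other network-level failures end up here.
        return False, f"DNS query error: {exc}"
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the IP address.
    addresses = sorted({entry[4][0] for entry in results})
    return True, addresses
```

Calling `check_dns("www.example.com")` returns `True` with the resolved addresses when the lookup succeeds, or `False` with a short reason when it does not.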

Server errors

A server error usually means your server took too long to respond and the request timed out. This often happens when the site is overloaded with traffic. Use ‘Fetch as Google’ to check whether Googlebot can retrieve the content of your homepage without problems. See the Google Search Console help for the specific types of server errors.
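A rough way to watch for slow or failing responses yourself is to time a request and classify the result. This is only a sketch: the names `probe` and `classify_response` and the 2-second `slow_after` threshold are assumptions chosen for illustration, not values published by Google.

```python
import time
import urllib.error
import urllib.request


def classify_response(status, elapsed, slow_after=2.0):
    """Label one probe result. `slow_after` (seconds) is an assumed threshold."""
    if status is None:
        return "no response"
    if 500 <= status <= 599:
        return "server error"
    if elapsed > slow_after:
        return "slow"
    return "ok"


def probe(url, timeout=10.0):
    """Fetch url once and return (status, elapsed_seconds).

    status is None when no HTTP response arrived (timeout, refused connection).
    """
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            status = resp.status
    except urllib.error.HTTPError as exc:
        # 4xx and 5xx responses are raised as HTTPError; keep the status code.
        status = exc.code
    except OSError:
        # URLError, timeouts and connection resets are all OSError subclasses.
        status = None
    return status, time.monotonic() - start
```

Running `classify_response(*probe("https://www.example.com/"))` on a schedule gives a simple early warning before the errors show up in Search Console.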

Robots errors

Fixing a robots.txt fetch error matters most if you publish new content daily. Use a ‘server header checker’ to check whether the file returns a 200 (OK) or a 404 (not found) status. Note that 200 is a success code, not an error.
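The status check on robots.txt can be sketched in a few lines. The helpers `robots_url` and `interpret_robots_status` and the labels they return are hypothetical names for illustration; the status code itself would come from whatever header checker or HTTP client you use.

```python
from urllib.parse import urljoin


def robots_url(site):
    """Build the robots.txt URL for the site that `site` belongs to."""
    return urljoin(site, "/robots.txt")


def interpret_robots_status(status):
    """Map the HTTP status of robots.txt to a short label (labels are illustrative)."""
    if 200 <= status <= 299:
        return "ok"            # file exists and can be read by crawlers
    if status == 404:
        return "missing"       # no robots.txt is being served
    if 500 <= status <= 599:
        return "server_error"  # the server failed while serving the file
    return "check"             # redirects, 403 and similar need a manual look
```

For example, `robots_url("https://example.com/blog/post")` gives `https://example.com/robots.txt`, and a 404 for that URL is labelled `missing`.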

URL Errors

URL errors affect specific pages rather than the whole site. Search Console shows URL errors separately for desktop, smartphone and feature phone. A practical approach is to mark all errors as fixed and check back a few days later to see which ones reappear.

Soft 404 and 404 errors are commonly found on sites. Other URL error types include ‘access denied’ and ‘not followed’. The Screaming Frog SEO Spider helps you find and fix redirect errors.
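A soft 404 is a page that tells the visitor it does not exist while the server still answers with a 200 status. A crude heuristic for spotting candidates is sketched below; the function name `looks_like_soft_404` and the phrase list are assumptions for illustration and do not reflect how Google detects soft 404s.

```python
# Phrases that often appear on "not found" pages (an assumed, incomplete list).
NOT_FOUND_PHRASES = ("page not found", "not found", "no longer available")


def looks_like_soft_404(status, body, phrases=NOT_FOUND_PHRASES):
    """Return True when a 200 response looks like an error page.

    Only 200 responses can be soft 404s; a real 404 status is a hard 404.
    """
    if status != 200:
        return False
    text = body.strip().lower()
    if not text:
        # An empty page served with 200 is a likely soft 404.
        return True
    return any(phrase in text for phrase in phrases)
```

Pages flagged this way should either return a real 404 or 410 status, or be given proper content.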

Fix crawl errors promptly to avoid serious damage to your site.